Cloud challenges

Overview

Teaching: 10 min

Exercises: 60 min

Questions

How to adapt the workflow to my needs?

How to get my own code in the processing step?

How to change the resource requests for a workflow step?

Objectives

Rehearse getting back to the cluster

Exercise adapting the example workflow

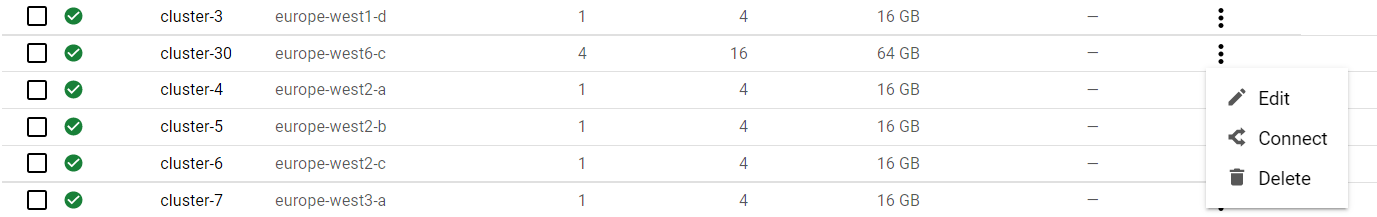

Getting back to your cluster

Get to your cluster through the Google Kubernetes Engine page.

Select your cluster and connect.

Open the cloud shell. The files that you had in the cloud shell are still available, but you will need to copy the argo executable to the path:

# Move binary to path

sudo cp ./argo-linux-amd64 /usr/local/bin/argo

# Test installation

argo version

Challenges

Choose one or more of the following challenges:

Challenge 1

Change the workflow to run over /SingleElectron/Run2015D-08Jun2016-v1/MINIAOD

Solution

- Find the dataset and identify its recid. Use the search facets to find all MINIAOD collision datasets from 2015.

- Change the input recid value in argo_poet.yaml.
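The edit in the last step can also be scripted. The following is a minimal sketch that runs on a stand-in file, not on the real workflow: the layout of the parameter section (a `name: recid` line followed by a `value:` line) is an assumption about argo_poet.yaml, and both recid numbers are placeholders, not real record IDs.

```shell
# Stand-in for the parameter section of argo_poet.yaml.
# ASSUMPTION: the real file may be laid out differently; check it first.
workdir=$(mktemp -d)
cat > "$workdir/argo_poet.yaml" <<'EOF'
  arguments:
    parameters:
    - name: recid
      value: 11111
EOF

# Placeholder: replace with the recid you identified for the dataset.
NEW_RECID=22222

# Rewrite the "value:" line that directly follows "name: recid".
# A backup of the original is kept as argo_poet.yaml.bak.
sed -i.bak "/name: recid/{n;s/value: .*/value: ${NEW_RECID}/;}" "$workdir/argo_poet.yaml"

# Show the result so the change can be checked before submitting.
grep -A1 'name: recid' "$workdir/argo_poet.yaml"
```

If the real file matches this layout, the same sed command can be pointed at your own copy of argo_poet.yaml; otherwise editing the value by hand is just as good.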

Challenge 2

Change the resource request to better match our cluster configuration.

If we look at the cost optimization of the cluster, we see that the choice of 750m for the CPU request was not optimal.

Solution

- Read the documentation about Kubernetes resource requests.

- Choose a different value for the resource request cpu: 750m in argo_poet.yaml and see how it changes the scheduling and resource usage.

- You can see the nodes on which the pods run with kubectl get pods -n argo -o wide

- You can see the resource usage of the nodes with kubectl top nodes and that of pods with kubectl top pods -n argo
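To get a feel for why one request value packs better than another, it can help to do the arithmetic before resubmitting. The sketch below is only an illustration: pods_per_node is a hypothetical helper, and 1930 millicores is an assumed allocatable CPU per node, not a value taken from this cluster. The real figure is listed under Allocatable in the output of kubectl describe nodes.

```shell
# pods_per_node ALLOCATABLE_MILLICORES REQUEST_MILLICORES
# Prints how many pods with the given CPU request fit on one node,
# followed by the millicores left unrequested.
pods_per_node() {
  alloc=$1
  request=$2
  echo "$((alloc / request)) $((alloc % request))"
}

# ASSUMPTION: 1930 is an illustrative allocatable CPU value in millicores.
# Read the real one from `kubectl describe nodes`.
for request in 750 900 600; do
  echo "request ${request}m: $(pods_per_node 1930 "$request")"
done
```

The estimate ignores the CPU already requested by system pods on each node, so treat it as an upper bound and confirm the actual placement with the kubectl commands above.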

Challenge 3

Change the processing step to use your own selection. For example, you may not want to have the PF candidate collection (packedPFCandidates) included because it makes the output file very large.

Solution

- Make your own fork of the POET repository and move to the odws2023 branch.

- Modify the configuration file PhysObjectExtractor/python/poet_cfg_cloud.py: remove process.mypackedcandidate from the two cms.Path definitions at the end of the file.

- Change the runpoet step in the workflow file to clone from your repository.

Challenge 4

Change the analysis step to plot different values. For example, you may want to plot some values from the PF candidate collection, such as the number of candidates and their PDG IDs.

Solution

- Make a fork of the POET repository and move to the odws2023 branch.

- Modify the analysis script PhysObjectExtractor/cloud/analysis.C for your needs.

- Change the analysis step to your code.

- You can use this simple plotter as an example.

- If you do not intend to change the actual processing, it is enough to have that file alone in a repository (or any other location from which you can get it with wget or curl -LO); it does not need to be a fork of the POET repository.

- Remember that you need to use the GitHub raw view to get the file if you are not cloning the repository.

- Change the analysis step in the workflow file to get the file from your repository.
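The raw view mentioned above has its own URL form, and the address of a file's normal GitHub page can be rewritten into it. The sketch below shows one way to do that; to_raw_url is a hypothetical helper, and the user, repository and branch names in the example are placeholders.

```shell
# to_raw_url GITHUB_FILE_PAGE_URL
# Rewrites https://github.com/USER/REPO/blob/BRANCH/PATH
# into     https://raw.githubusercontent.com/USER/REPO/BRANCH/PATH
to_raw_url() {
  echo "$1" | sed -E 's#^https://github\.com/([^/]+)/([^/]+)/blob/#https://raw.githubusercontent.com/\1/\2/#'
}

# Placeholder names: substitute your own user, repository, branch and path.
url=$(to_raw_url "https://github.com/your-username/your-repo/blob/main/analysis.C")
echo "$url"

# The file can then be fetched with either of:
#   wget "$url"
#   curl -LO "$url"
```

Fetching the normal page URL instead would download the HTML of the GitHub page, not the script itself.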

Key Points

Workflows can be written in a way to allow changes through input parameters

The processing and analysis steps can clone your code from git repositories