Content from Introduction

Last updated on 2024-06-19

Estimated time: 5 minutes

Overview

Questions

- What is Docker?

- What is the point of these exercises?

Objectives

- Learn about Docker and why we’re using it

What is Docker?

Let’s learn about Docker and why we’re using it!

Regardless of what you encounter in this lesson, the definitive guide is any official documentation provided by Docker.

From the Docker website

A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

In short, Docker allows a user to work in a computing environment that is well defined and has been frozen with respect to interdependent libraries and code and related tools. The computing environment in the container is separate and independent from your own working area. This means that you do not have to worry about software packages that you might already have installed with a different version.

What can I learn here?

As much as we’d like, we can’t give you a complete overview of Docker. However, we do hope to explain why we run Docker in the way we do so that you gain some understanding. More specifically, we’ll be showing you how to set up Docker not just for this workshop, but for interfacing with the CMS open data in general.

- Docker is a set of products to deliver and run software in packages called containers.

- Software containers are widely used these days in both industry and academic research.

- We use software containers during the hands-on sessions to provide a well-defined software environment for exercises.

Content from Installing docker

Last updated on 2024-06-25

Estimated time: 35 minutes

Overview

Questions

- How do you install Docker?

- What are the main Docker concepts and commands I need to know?

Objectives

- Install Docker

- Test the installation

- Learn and exercise the basic commands.

Installing docker

Go to the official Docker site and follow their installation instructions to install Docker for your operating system.

For our purposes, we need Docker Engine, which is free, open-source software. Note that you can choose to install Docker Desktop (free for single, non-commercial use), but our instructions do not rely on the graphical user interface that it offers.

Windows users:

In the episodes of this lesson that follow, we assume that Windows users have WSL2 activated with a Linux bash shell (e.g. Ubuntu). All commands indicated with “bash” are expected to be typed in this Linux shell (not in Git Bash or PowerShell).

Testing

As you walk through their documentation, you will eventually come to a point where you will run a very simple test, usually involving their hello-world container.

You can find their documentation for this step here.

Testing the installation can be summed up as being able to run the hello-world container with docker run hello-world, without generating any errors.

Important: Docker postinstall to avoid sudo for Linux installation

If you need sudo to run the command above, make sure to complete the Docker post-installation steps for Linux; otherwise you will run into trouble with shared file access later on. Guaranteed!

In brief: create the docker group if it does not exist yet, and add your user to it.

Then close the shell and open a new one. Verify that you can run docker run hello-world without sudo.
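Why a new shell is needed can be illustrated with a small check. The sketch below is for Linux only and assumes that the post-installation steps added your user to a Unix group called docker; the helper name in_group is made up for this illustration. It tests whether the current process already carries that group, which normally becomes true only in a shell started after the change.

```python
import grp
import os


def in_group(group_name: str) -> bool:
    """Return True if the current process belongs to the named Unix group."""
    try:
        gid = grp.getgrnam(group_name).gr_gid
    except KeyError:
        # The group does not exist on this machine at all.
        return False
    # Group membership is fixed when the shell (and this process) starts,
    # which is why a freshly opened shell is needed after changing groups.
    return gid in os.getgroups() or gid == os.getegid()


if in_group("docker"):
    print("This shell is in the 'docker' group; sudo should not be needed.")
else:
    print("Not in the 'docker' group yet: open a new shell or redo the post-install steps.")
```

If the check still fails in a new shell, logging out and back in is usually the next thing to try.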

Images and Containers

As mentioned above, the official Docker sites provide ample documentation. However, a couple of concepts are crucial for using container technology with CMS open data: container images and containers.

One can think of the container image as the main ingredients for preparing a dish, and the final dish as the container itself. You can prepare many dishes (containers) based on the same ingredients (container image). Images can exist without containers, whereas a container needs to run an image to exist. Therefore, containers are dependent on images and use them to construct a run-time environment and run an application.

The final dish, for us, is a container that can be thought of as an isolated machine (running on the host machine) with mostly its own operating system and the adequate software and run-time environment to process CMS open data.

Docker provides the ability to create, build and/or modify images, which can then be used to create containers. For the MC generator, machine learning and CMS open data lessons, we will use already-built and ready-to-use images to create the containers we need, but we will practise building images with some additional code later on during the Midsummer QCD school hands-on sessions.

Commands Cheatsheet

There are many Docker commands that can be executed for different tasks. However, the most useful for our purposes are the following. We will show some usage examples for some of these commands later. Feel free to explore other commands.

Create and start a container based on a specific image

This command will be used later to create our CMS open data container.

The option -v, which mounts a directory from the local computer into the container, will also be used so that you can edit files in your normal editor and use them in the container.

Copy files in or out of a container

- For up-to-date details for installing Docker, the official documentation is the best bet.

- Make sure you were able to download and run Docker’s hello-world example.

- The concepts of image and container, plus knowledge of certain Docker commands, are all that is needed for the hands-on sessions.

Content from Docker containers for CMS open data

Last updated on 2024-06-26

Estimated time: 35 minutes

Overview

Questions

- What container images are available for my work with the CMS open data?

Objectives

- Download the ROOT and python images and build your own container

- Restart an existing container

- Learn how to share a working area between your laptop and the container.

Overview

This exercise will walk you through setting up and familiarizing yourself with Docker, so that you can effectively use it to interface with the CMS open data. It is not meant to completely cover containers and everything you can do with Docker.

For CMS open data work, three types of container images are provided: one with the CMS software (CMSSW) compatible with the released data, and two others with ROOT and python libraries needed in this workshop.

You will only need the CMSSW container if you want to access CMS data in the MiniAOD format (you will learn about it later). Access to it requires CMSSW software that you will not be able to install on your own computer.

During the workshop hands-on lessons, we will be using CMS data in the NanoAOD format, and access to it does not require any CMS-specific software. Two containers are provided to make setting up and using ROOT and/or python libraries easier for you for this tutorial, but if you wish, you can also install them on your computer.

All container images come with VNC for the graphical user interface. It opens directly in a browser window. Optionally, you can also connect to the VNC server of the container using a VNC viewer (TigerVNC, RealVNC, TightVNC, OSX built-in VNC viewer, etc.) installed on your local machine, but these instructions describe only the browser option, which needs no additional tools. On native Linux, you can also use X11-forwarding.

For different container images, some guidance can be found on the Open Data Portal introduction to Docker. The use of graphical interfaces, such as the graphics window from ROOT, depends on the operating system of your computer. Therefore, in the following, separate instructions are given for Windows WSL, Linux and MacOS.

Start the container

The first time you start a container, a Docker image file gets downloaded from an image registry. The open data images are large (2-3 GB for the ROOT and python tools images and substantially larger for the CMSSW image), and the download may take a long time, depending on the speed of your internet connection. After the download, a container created from that image starts. The image download needs to be done only once. Afterwards, when starting a container, Docker will find the downloaded image on your computer, and the start will be much faster.

The containers do not have modern editors and it is expected that you mount your working directory from the local computer into the container, and use your normal editor for editing the files. Note that all your compiling and executing still has to be done in the Docker container!

First, before you start up your container, create a local directory where you will be doing your code development. In the examples below, it is called cms_open_data_python and cms_open_data_root, respectively, and the variable workpath will record your working directory path. You may choose a different location and a shorter directory name if you like.
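The preparation described above can be sketched in Python. This is only an illustration under stated assumptions: the helper names prepare_shared_dir and mount_option are invented here, and opening the directory permissions to 0o777 is an assumption made so that the container user can write to the shared area even if its user ID differs from yours.

```python
import tempfile
from pathlib import Path


def prepare_shared_dir(workpath: Path, name: str) -> Path:
    """Create the shared directory under workpath and open up its permissions."""
    shared = Path(workpath) / name
    shared.mkdir(parents=True, exist_ok=True)
    # Assumption: wide-open permissions, so that the container user
    # can write here even if its user ID differs from yours.
    shared.chmod(0o777)
    return shared


def mount_option(shared: Path, container_dir: str = "/code") -> str:
    """Build the host:container argument that follows -v in docker run."""
    return f"{shared.resolve()}:{container_dir}"


# Demonstration in a throwaway directory standing in for your working area.
with tempfile.TemporaryDirectory() as workpath:
    shared = prepare_shared_dir(Path(workpath), "cms_open_data_python")
    print("docker run ... -v", mount_option(shared), "...")
```

In real use, workpath would be the directory you chose for the lesson rather than a temporary one.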

Python tools container

Create the shared directory in your working area:

Start the container with

BASH

docker run -it --name my_python -P -p 8888:8888 -v ${workpath}/cms_open_data_python:/code gitlab-registry.cern.ch/cms-cloud/python-vnc:python3.10.5

You will get a container prompt similar to this:

OUTPUT

cmsusr@4fa5ac484d6f:/code$

This is a bash shell in the container. If you had some files in the shared area, they would be available here.
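To see what each part of the docker run line does, it can help to assemble it piece by piece. The sketch below builds the same command as a Python list; the function docker_run_command and the host path /home/me/cms_open_data_python are hypothetical and only serve the illustration. It prints the command and does not run Docker.

```python
import shlex


def docker_run_command(image, name, host_dir, container_dir="/code", ports=()):
    """Assemble the argument list for an interactive docker run call."""
    # -it: interactive terminal, --name: container name for later restarts,
    # -P: publish all ports exposed by the image on free host ports
    cmd = ["docker", "run", "-it", "--name", name, "-P"]
    for port in ports:
        # -p host_port:container_port publishes one container port on the host
        cmd += ["-p", f"{port}:{port}"]
    # -v host_dir:container_dir mounts the shared working area
    cmd += ["-v", f"{host_dir}:{container_dir}", image]
    return cmd


cmd = docker_run_command(
    image="gitlab-registry.cern.ch/cms-cloud/python-vnc:python3.10.5",
    name="my_python",
    host_dir="/home/me/cms_open_data_python",  # hypothetical host path
    ports=[8888],
)
print(shlex.join(cmd))
```

The image name always comes last, after all options; anything placed after it would be passed to the container as the command to run.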

You can now open a jupyter lab from the container prompt with

and open the link that is printed out in the message.

Link does not work?

Try replacing 127.0.0.1 in the link with localhost.

Click on the Jupyter notebook icon to open a new notebook.

Permission denied?

If a window with “Error Permission denied: Untitled.ipynb” pops up, you most likely forgot to define the path variable, to create the shared directory or to change its permissions. Exit the jupyter lab with Control-C and confirm with y, then type exit to stop the container. Remove the container with docker rm my_python and start again from the beginning.

Close the jupyter lab with Control-C and confirm with y. Type exit to leave the container:

Root tools container

Create the shared directory in your working area:

Then start the container, depending on your host system:

BASH

docker run -it --name my_root -P -p 5901:5901 -p 6080:6080 -v ${workpath}/cms_open_data_root:/code gitlab-registry.cern.ch/cms-cloud/root-vnc:latest

You will get a container prompt similar to this:

OUTPUT

cmsusr@9b182de87ffc:/code$

For graphics, use VNC, which is installed in the container, and start the graphics windows with start_vnc. Open a browser window at the address given in the start message (http://127.0.0.1:6080/vnc.html) and connect with the default VNC password cms.cern. It shows an empty screen to start with, and all graphics will pop up there.

You can test it with ROOT:

Open a ROOT Object Browser by typing

TBrowser t

in the ROOT prompt.

You should see it opening in the VNC tab of your browser.

Exit ROOT with

.q

in the ROOT prompt.

Type exit to leave the container, and if you have started VNC, stop it first:

On native Linux, you can instead start the container with X11-forwarding:

BASH

docker run -it --name my_root --net=host --env="DISPLAY" -v $HOME/.Xauthority:/home/cmsusr/.Xauthority:rw -v ${workpath}/cms_open_data_root:/code gitlab-registry.cern.ch/cms-cloud/root-vnc:latest

You will get a container prompt similar to this:

OUTPUT

cmsusr@9b182de87ffc:/code$

For graphics, X11-forwarding to your host is used.

You can test it with ROOT:

Open a ROOT Object Browser by typing

TBrowser t

in the ROOT prompt.

You should see the ROOT Object Browser opening.

Exit ROOT with

.q

in the ROOT prompt.

Type exit to leave the container:

Exercises

Challenge 1

Create a file on your local host and make sure that you can see it in the container.

Open the editor in your host system, create a file example.txt and save it to the shared working area, either in cms_open_data_python or in cms_open_data_root.

Restart the container, making sure to choose the container that is connected to the shared working area that you have chosen:

or

In the container prompt, list the files and show the contents of the newly created file:

Challenge 2

Make a plot with the Jupyter notebook, save it to a file and make sure that you get it to your host.

Restart the python container with

Start the Jupyter lab with

Open a new jupyter notebook.

Make a plot of your choice. For a quick CMS open data plot, you can use the following:

PYTHON

import uproot

import matplotlib.pyplot as plt

import awkward as ak

import numpy as np

# open a CMS open data file, we'll see later how to find them

file = uproot.open("root://eospublic.cern.ch//eos/opendata/cms/Run2016H/SingleMuon/NANOAOD/UL2016_MiniAODv2_NanoAODv9-v1/120000/61FC1E38-F75C-6B44-AD19-A9894155874E.root")

file.classnames() # this is just to see the top-level content

events = file['Events']

# take all muon pt values in an array, we'll see later how to find the variable names

pt = events['Muon_pt'].array()

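plt.figure()

plt.hist(ak.flatten(pt),bins=200,range=(0,200))

# save before plt.show(): after show(), the figure may already be closed and the file would be empty

plt.savefig("pt.png")

plt.show()

Run the code cell, and you should see the muon pt plot.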
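The call to ak.flatten in the plotting code is there because each event can contain a different number of muons, so Muon_pt is a jagged array with one list of values per event, while a histogram needs a single flat list. The following pure-Python analogue, with made-up numbers and without the awkward library, shows the idea.

```python
from itertools import chain

# Made-up transverse momenta (GeV): one inner list per event.
muon_pt_per_event = [
    [41.2, 18.7],  # event with two muons
    [],            # event with no muons
    [63.5],        # event with one muon
]

# Analogue of ak.flatten(pt): drop the event boundaries, keep all values.
flat_pt = list(chain.from_iterable(muon_pt_per_event))
print(flat_pt)
```

The flattened list has one entry per muon rather than one per event, which is what the histogram counts.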

You can rename your notebook by right-clicking on the name in the left bar and choosing “Rename”.

In a shell on your host system, move to the working directory, list the files and you should see the notebook and the plot file.

OUTPUT

myplot.ipynb pt.png

Challenge 3

Make a plot with ROOT, save it to a file and make sure that you get it to your host.

Restart the root container with

If you are using VNC, start it in the container prompt with

Open the URL in your browser and connect with the password

cms.cern.

Start ROOT and make a plot of your choice.

For a quick CMS open data plot, you can open a CMS open data file with ROOT with

BASH

root root://eospublic.cern.ch//eos/opendata/cms/Run2016H/SingleMuon/NANOAOD/UL2016_MiniAODv2_NanoAODv9-v1/120000/61FC1E38-F75C-6B44-AD19-A9894155874E.root

In the ROOT prompt, type

TBrowser t

to open the ROOT object browser, which opens in your browser VNC tab.

Double click on the file name, then on Events and then on a variable of your choice, e.g. nMuon.

You should see the plot. To save it, right click in the plot margins and you will see a menu named TCanvas::Canvas_1. Choose “Save as” and give the name, e.g. nmuon.png.

Quit ROOT by typing .q in the ROOT prompt or choosing “Quit Root” from the ROOT Object Browser menu options.

In a terminal on your host system, move to the working directory, list the files and you should see the plot file.

OUTPUT

nmuon.png

- You have now set up a Docker container as a working environment for CMS open data.

- You know how to pass files between your own computer and the container.

- You know how to open a graphical window of ROOT or a jupyterlab in your browser using software installed in the container.

Content from Docker containers for Monte Carlo generators and Rivet

Last updated on 2024-06-25

Estimated time: 35 minutes

Overview

Questions

- What container images are available for MC generators?

Objectives

- Download a container image with Pythia and Rivet

- Learn how to use the container

Overview

You will learn about Rivet during this QCD school. The Rivet project provides container images with Rivet and Monte Carlo generators included. For now, we will download the Rivet container images with Pythia and Sherpa code included.

Rivet containers

The first time you start a container, a Docker image file gets downloaded from an image registry. The Rivet images are of the order of 3 GB in size, and the download may take some time, depending on the speed of your internet connection. After the download, a container created from that image starts. The image download needs to be done only once. Afterwards, when starting a container, Docker will find the downloaded image on your computer, and the start will be much faster.

The containers do not have modern editors and it is expected that you mount your working directory from the local computer into the container, and use your normal editor for editing the files. Note that all your compiling and executing still has to be done in the Docker container!

First, before you start up your container, create a local directory where you will be doing your code development. In the examples below, it is called pythia_work or sherpa_work. You may choose a different directory name if you like.

Start the Pythia container

Create the shared directory in your working area:

Start the container with

This will share your current working directory ($PWD) into the container’s /host directory.

The flag --rm makes Docker delete the local container when you exit, and every time you run this command it creates a new container. This means that changes made anywhere other than in the shared /host directory will be deleted.

Note that the --rm flag does not delete the image that you just downloaded.

You will get a container prompt similar to this:

OUTPUT

root@3bbca327eda3:/work#

This is a bash shell in the container. Change to the shared /host directory.

You will be doing all your work in this shared directory. You can

also set this directory as the working directory with flag

-w /host in the docker run... command.

For now, exit from the container:

List your local Docker images and containers and see that you have

the hepstore/rivet-pythia image but no local containers as

they were deleted with the --rm flag.

Start the Sherpa container

Move back from the pythia working area with

Create the shared directory in your working area:

Start the container with

This will share your current working directory ($PWD) into the container’s /host directory.

The flag --rm makes Docker delete the local container when you exit, and every time you run this command it creates a new container. This means that changes made anywhere other than in the shared /host directory will be deleted.

Note that the --rm flag does not delete the image that you just downloaded.

You will get a container prompt similar to this:

OUTPUT

root@2560cb95ef24:/host#

The flag -w /host has set /host as the working directory. You will be doing all your work in this shared directory.

For now, exit from the container:

Test the environment

Challenge 1

Create a file on your local host and make sure that you can see it in the container.

Open the editor in your host system, create a file

example.txt and save it to the shared working area in

pythia_work.

Start a container in your shared pythia_work

directory:

In the container prompt, list the files and show the contents of the newly created file:

Challenge 2

Create a file in the container and make sure that you can see it on your local area.

If you are not already in the container, start it with

Write some output to a file

Exit from the container and see that you have the output file on your local area.

Challenge 3

Check the Rivet version in the Sherpa container.

Challenge 4

In the Pythia container, copy a Pythia example code

main01.cc, and Makefile and

Makefile.inc from

/usr/local/share/Pythia8/examples/ of the container to your

shared area. Compile the code with make and run it.

Start the Pythia container in your shared pythia_work

directory:

Copy the files to your working area with

BASH

cp /usr/local/share/Pythia8/examples/main01.cc .
cp /usr/local/share/Pythia8/examples/Makefile* .

Compile and run

You will get a particle listing and a lovely ASCII histogram of charged multiplicity.

- You have now set up a Docker container as a working environment for Rivet and Pythia.

- You know how to share a working area between your own computer and the container.

Content from Building your own docker image

Last updated on 2025-01-22

Estimated time: 30 minutes

Overview

Questions

- What if the container image does not have what you need installed?

Objectives

- Learn how to build a container image from an existing image

- Use the container image locally

Overview

You might be using a container image, but it does not have everything you need installed. Therefore, every time you create a container from that image, you will need to install something in it. To save time, you can build an image of your own with everything you need.

Building an image from an existing image is easy and can be done with

docker commands.

We will now exercise how to do it, first with a simple example and then with a container image that we have been using in the exercises.

But first, let us see where we get the images that we have been using so far.

Container image registries

Container images are available from container registries. In the previous episodes, we have used the following images:

- hello-world

- gitlab-registry.cern.ch/cms-cloud/python-vnc:python3.10.5

- gitlab-registry.cern.ch/cms-cloud/root-vnc:latest

- hepstore/rivet-pythia

- hepstore/rivet-sherpa:3.1.7-2.2.12

The syntax for the image name is:

[registry_name]/[repository]/image_name:[version]

The registry name is like a domain name. If no registry name is given, the default location is the Docker image registry, Docker Hub. This is the case for hello-world and the MC generator images. CMS open data images come from the container image registry of a CERN GitLab project.

The repository is a collection of container images within the container registry. For the MC generator images, it is hepstore in Docker Hub; for the CMS open data images, it is cms-cloud in the CERN GitLab.

A version tag comes after the colon (:). If no value is given, Docker uses the tag latest. Note that latest is only a conventional tag name: it usually points to the most recent version, but that depends on the image maintainer. Versions may change, so it is good practice to specify the version.
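The naming rules above can be made concrete with a small parser. This is a simplified sketch, not Docker's own implementation: the function parse_image_name is invented for illustration, it follows this lesson's use of "repository" for the namespace part, and it ignores digests of the form @sha256:... . It assumes that a leading component is a registry only if it looks like a host name, and that Docker Hub (docker.io) with the library namespace for official images is the default.

```python
from typing import NamedTuple


class ImageName(NamedTuple):
    registry: str
    repository: str
    image: str
    tag: str


def parse_image_name(name: str) -> ImageName:
    """Split [registry/][repository/]image[:tag] into its parts."""
    # The tag is whatever follows a ':' that comes after the last '/'.
    path, tag = name, "latest"
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        path, tag = name[:colon], name[colon + 1:]
    parts = path.split("/")
    # A leading component counts as a registry only if it looks like a host name.
    if len(parts) > 1 and ("." in parts[0] or ":" in parts[0] or parts[0] == "localhost"):
        registry, parts = parts[0], parts[1:]
    else:
        registry = "docker.io"  # Docker Hub
    repository = "/".join(parts[:-1])
    if registry == "docker.io" and not repository:
        repository = "library"  # Docker Hub namespace for official images
    return ImageName(registry, repository, parts[-1], tag)


for name in [
    "hello-world",
    "hepstore/rivet-sherpa:3.1.7-2.2.12",
    "gitlab-registry.cern.ch/cms-cloud/python-vnc:python3.10.5",
]:
    print(parse_image_name(name))
```

For hello-world, all defaults apply, so the parser reports the registry docker.io, the repository library and the tag latest.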

Building an image

We will use a small Python image as a base image and add one Python package in it.

Python container images are available from the Docker Hub: https://hub.docker.com/_/python

There’s a long list of them, and we choose a tag with

- “slim” to keep it small and fast

- “bookworm” for a stable Debian release.

The recipe for the image build is called Dockerfile.

Create a new working directory, e.g. docker-image-build and

move to it.

Choose an example Python package to install, for example emoji.

Create a new file named Dockerfile with the following

content

FROM python:slim-bookworm
RUN pip install emoji

Build a new image with

The command docker build uses the Dockerfile (in the directory in which it is run) to build an image with the name defined with --tag. Note the dot (.) at the end of the line.
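The relation between the Dockerfile, the tag and the final dot can be sketched as follows. The helper write_build_context is hypothetical; it writes the two-line Dockerfile of this exercise into a directory and returns a matching docker build argument list, using the image name python-emoji that appears in the build output. The sketch prints the command and does not run Docker.

```python
import tempfile
from pathlib import Path

DOCKERFILE = """\
FROM python:slim-bookworm
RUN pip install emoji
"""


def write_build_context(directory: Path) -> list[str]:
    """Write the Dockerfile into directory and return a matching build command."""
    (directory / "Dockerfile").write_text(DOCKERFILE)
    # The last argument is the build context, where docker looks for Dockerfile.
    # It plays the role of the dot when the command is run inside the directory.
    return ["docker", "build", "--tag", "python-emoji", str(directory)]


# Demonstration in a throwaway directory standing in for docker-image-build.
with tempfile.TemporaryDirectory() as tmp:
    command = write_build_context(Path(tmp))
    print(" ".join(command))
```

Run from inside the working directory, the last argument becomes the dot, meaning "use the current directory as the build context".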

OUTPUT

[+] Building 37.2s (7/7) FINISHED docker:default

=> [internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 85B 0.0s

=> [internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/python:slim-bookworm 5.3s

=> [auth] library/python:pull token for registry-1.docker.io 0.0s

=> [1/2] FROM docker.io/library/python:slim-bookworm@sha256:da2d7af143dab7cd5b0d5a5c9545fe14e67fc24c394fcf1cf15 23.1s

=> => resolve docker.io/library/python:slim-bookworm@sha256:da2d7af143dab7cd5b0d5a5c9545fe14e67fc24c394fcf1cf15e 0.0s

=> => sha256:da2d7af143dab7cd5b0d5a5c9545fe14e67fc24c394fcf1cf15e8ea16cbd8637 9.12kB / 9.12kB 0.0s

=> => sha256:daab4fae04964d439c4eed1596713887bd38b89ffc6bdd858cc31433c4dd1236 1.94kB / 1.94kB 0.0s

=> => sha256:d9711c6b91eb742061f4d217028c20285e060c3676269592f199b7d22004725b 6.67kB / 6.67kB 0.0s

=> => sha256:2cc3ae149d28a36d28d4eefbae70aaa14a0c9eab588c3790f7979f310b893c44 29.15MB / 29.15MB 15.7s

=> => sha256:07ccb93ecbac82807474a781c9f71fbdf579c08f3aca6e78284fb34b7740126d 3.32MB / 3.32MB 3.4s

=> => sha256:aaa0341b7fe3108f9c2dd21daf26baff648cf3cef04c6b9ee7df7322f81e4e3a 12.01MB / 12.01MB 10.0s

=> => sha256:c9d4ed0ae1ba79e0634af2cc17374f6acdd5c64d6a39beb26d4e6d1168097209 231B / 231B 4.4s

=> => sha256:b562c417939b83cdaa7dee634195f4bdb8d25e7b53a17a5b8040b70b789eb961 2.83MB / 2.83MB 8.6s

=> => extracting sha256:2cc3ae149d28a36d28d4eefbae70aaa14a0c9eab588c3790f7979f310b893c44 4.3s

=> => extracting sha256:07ccb93ecbac82807474a781c9f71fbdf579c08f3aca6e78284fb34b7740126d 0.3s

=> => extracting sha256:aaa0341b7fe3108f9c2dd21daf26baff648cf3cef04c6b9ee7df7322f81e4e3a 1.3s

=> => extracting sha256:c9d4ed0ae1ba79e0634af2cc17374f6acdd5c64d6a39beb26d4e6d1168097209 0.0s

=> => extracting sha256:b562c417939b83cdaa7dee634195f4bdb8d25e7b53a17a5b8040b70b789eb961 0.8s

=> [2/2] RUN pip install emoji 8.3s

=> exporting to image 0.2s

=> => exporting layers 0.1s

=> => writing image sha256:f784d45ea915a50d47b90a3e2cdd3a641c1fd75bc57725f2fb5325c82cf66d1b 0.0s

=> => naming to docker.io/library/python-emoji 0.0s

What's Next?

View a summary of image vulnerabilities and recommendations → docker scout quickview

You can check with docker images that the image has appeared in the list of images.

Using the image locally

Start the container with

The flag --rm makes docker delete the local container

when you exit.

It will give you a Python prompt.

Test that the Python package is in the container

Read from the emoji package documentation how to use it and test that it works as expected.

OUTPUT

Python 3.12.4 (main, Jun 27 2024, 00:07:37) [GCC 12.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from emoji import emojize as em

>>> print(em(":snowflake:"))

❄️

Exit with exit() or Ctrl-d.

Inspect the container from the bash shell

Start the container bash shell and check what Python packages are installed.

Hint: use /bin/bash in the docker run...

command.

Start the container in its local bash shell:

Use pip list to see the Python packages:

OUTPUT

root@aef232a1babe:/# pip list

Package Version

----------------- -------

emoji 2.12.1

pip 24.0

setuptools 70.1.1

typing_extensions 4.12.2

wheel 0.43.0

[notice] A new release of pip is available: 24.0 -> 24.1.1

[notice] To update, run: pip install --upgrade pip

It is often helpful to use bash shell to inspect the contents of a container.

Exit from the container with exit.

Run a single Python script in the container

Sometimes, it is convenient to pass a single Python script to the container. Write an example Python script to be passed into the container.

Make a new subdirectory (you can name it test) and save

the Python code in a file (name it myscript.py):

from emoji import emojize as em

print(em(":ghost:"))

Now, you must pass this script into the container using the -v flag in the docker run ... command. We know to use the container directory /usr/src from the Python container image usage instructions, and we choose to name the container directory mycode.

You can use the -w flag to open the container in that directory.

BASH

docker run -it --rm -v "$PWD"/test:/usr/src/mycode -w /usr/src/mycode python-emoji python myscript.py

👻

Make the Python script configurable

Make the Python script configurable so that you can use any of the available emojis. I’m sure you have tried a few already 😉

Save the following in a file (e.g. printme.py) in the test directory:

from emoji import emojize as em
import sys

if __name__ == "__main__":
    anemoji = sys.argv[1]
    print(em(":"+anemoji+":"))

Run this with

BASH

docker run -it --rm -v "$PWD"/test:/usr/src/mycode -w /usr/src/mycode python-emoji python printme.py sparkles

✨
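The script above fails with an IndexError if it is run without an argument. A slightly more defensive variant is sketched below; the function names shortcode and main are choices made for this illustration, and the fallback that prints the raw shortcode when the emoji package is missing is an addition so that the sketch also runs outside the container.

```python
import sys


def shortcode(name: str) -> str:
    """Wrap an emoji name in colons, e.g. sparkles -> :sparkles:."""
    return ":" + name.strip(":") + ":"


def main(argv: list[str]) -> int:
    """Print the emoji named in argv[1]; return a shell-style exit code."""
    if len(argv) != 2:
        print("usage: printme.py <emoji-name>", file=sys.stderr)
        return 1
    try:
        from emoji import emojize  # installed in the python-emoji image
    except ImportError:
        # Outside the container the package may be missing: show the raw code.
        print(shortcode(argv[1]))
        return 0
    print(emojize(shortcode(argv[1])))
    return 0


# Example call; in a real script this would be main(sys.argv).
main(["printme.py", "sparkles"])
```

Returning an exit code instead of raising lets the caller, for example a shell script that wraps the docker run command, detect a missing argument.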

As the docker command has become long, you could define a short-cut, an “alias”. First, verify your existing aliases:

If printme is not in use already, define it as alias to

the long command above:

BASH

alias printme="docker run -it --rm -v "$PWD"/test:/usr/src/mycode -w /usr/src/mycode python-emoji python printme.py"

Now try:

🎉

Have fun!

Add code to the image

If the code that you use in the container is stable, you can add it to the container image.

Create a code subdirectory and copy our

production-quality printme.py script in it.

Modify the Dockerfile to use /usr/src/code

as the working directory and copy the local code

subdirectory into the container (the dot brings it to the working

directory defined in the previous line):

FROM python:slim-bookworm

RUN pip install emoji

WORKDIR /usr/src/code

COPY code .

Build the image with this new definition. It is good practice to use version tags:

Inspect the new container

Open a bash shell in the container and check if the file is present

Run the script

Run the script that is now in the container.

More complex Dockerfiles

Check if you can make sense of more complex Dockerfiles based on what you learnt from our simple case.

Explore Dockerfiles

Explore the various hepforge Dockerfiles. You can find them under the MC generator-specific directories in the HEPStore code repository.

You can start from the rivet-pythia image Dockerfile.

Find the familiar instructions FROM, RUN, COPY and WORKDIR.

Observe how the image is built FROM an existing image

FROM hepstore/rivet:${RIVET_VERSION}

Find the Dockerfile for that base image: rivet Dockerfile.

See how it is built FROM another base image

FROM hepstore/hepbase-${ARCH}-latex

and explore a hepbase Dockerfile.
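When a Dockerfile gets long, a quick overview of its instructions can help with this kind of exploration. The sketch below is a deliberately simple reader, not a full Dockerfile parser: the function list_instructions is invented here, it only skips comments and blank lines and joins lines that end with a backslash, and it is shown on the small Dockerfile from this episode.

```python
def list_instructions(dockerfile_text: str) -> list[tuple[str, str]]:
    """Return (INSTRUCTION, arguments) pairs from the text of a Dockerfile."""
    instructions = []
    pending = ""
    for raw in dockerfile_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.endswith("\\"):
            # A trailing backslash continues the instruction on the next line.
            pending += line[:-1].rstrip() + " "
            continue
        keyword, _, args = (pending + line).partition(" ")
        instructions.append((keyword.upper(), args.strip()))
        pending = ""
    return instructions


example = """\
FROM python:slim-bookworm
RUN pip install emoji
WORKDIR /usr/src/code
COPY code .
"""
for keyword, args in list_instructions(example):
    print(f"{keyword:8} {args}")
```

Applied to the text of one of the longer Dockerfiles, the same loop gives a compact list in which FROM, RUN, COPY and WORKDIR are easy to spot.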

Build a Machine Learning Python image

In the Machine Learning hands-on session, the first thing to do was to install some additional packages in the Python container. Can you build a container image with these packages included?

Build a new Python image with the ML packages

Build a new Machine Learning Python image with the ML Python packages included. Use as the base image the new tag python3.10.12 that includes a package needed for the ML exercises to work.

The container image FROM which you want to build the new

image is

gitlab-registry.cern.ch/cms-cloud/python-vnc:python3.10.12

which has an update kindly provided for the ML exercise to work.

The Python packages were installed with

BASH

pip install qkeras==0.9.0 tensorflow==2.11.1 hls4ml h5py mplhep cernopendata-client pydot graphviz fsspec-xrootd

pip install --upgrade matplotlib

Note that in the new image, you need to add fsspec-xrootd to the Python packages to install.

When you ran the upgrade for matplotlib, you might have noticed the last line of the output, Successfully installed matplotlib-3.9.1. So we pick that version for matplotlib.

Write these packages in a new file called

requirements.txt:

qkeras==0.9.0

tensorflow==2.11.1

hls4ml

h5py

mplhep

cernopendata-client

pydot

graphviz

matplotlib==3.9.1

fsspec-xrootd==0.3.0

In the Dockerfile, instruct Docker to start FROM the base image, COPY the requirements file into the image and RUN the pip install ... command:

FROM gitlab-registry.cern.ch/cms-cloud/python-vnc:python3.10.12

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

Build the image with

Start a new container from this new image and check with

pip list if the packages are present. Since you do not need

to install anything in the container, you can as well remove it after

the start with the flag --rm (in the similar way as it was

done with the MC generator containers):

What can go wrong?

Can you spot a problem in the way we built the image? What will happen if you use the same Dockerfile some months from now?

We defined some of the Python packages without a version tag. The current versions will get installed. That’s fine if you intend to use them right now just once.

But check the release history of the packages at https://pypi.org/project/<package>/#history. You will notice that some of them are updated often.

While the container that you start from this image will be the same, it may well be that next time you build the image with this Dockerfile, you will not get the same packages.
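One way to spot such entries is to scan the requirements file for lines without an exact version. The sketch below is a simplified check, assuming that only the name==version form counts as pinned; the function name unpinned is made up, and entries with extras or other specifiers are simply reported as unpinned. It is applied to the first version of requirements.txt from this exercise.

```python
import re

# A requirement counts as pinned here only if it uses an exact '==' version.
PINNED = re.compile(r"^[A-Za-z0-9._-]+==\S+$")


def unpinned(requirements_text: str) -> list[str]:
    """Return the requirement lines that do not pin an exact version."""
    names = []
    for line in requirements_text.splitlines():
        entry = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if entry and not PINNED.match(entry):
            names.append(entry)
    return names


requirements = """\
qkeras==0.9.0
tensorflow==2.11.1
hls4ml
h5py
mplhep
cernopendata-client
pydot
graphviz
matplotlib==3.9.1
fsspec-xrootd==0.3.0
"""
print(unpinned(requirements))
```

Each name it reports is a package whose version could differ the next time the image is built.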

It is always best to define the package versions. You can find the

current versions from the listing of pip list. Update

requirements.txt to

qkeras==0.9.0

tensorflow==2.11.1

hls4ml==0.8.1

h5py==3.11.0

mplhep==0.3.50

cernopendata-client==0.3.0

pydot==2.0.0

graphviz==0.20.3

matplotlib==3.9.1

fsspec-xrootd==0.3.0

Learn more

Learn more about Dockerfile instructions in the Dockerfile reference.

Learn more about writing Dockerfiles in the HSF Docker tutorial.

Learn more about using Docker containers in the analysis work in the Analysis pipelines training.

- It is easy to build a new container image on top of an existing image.

- You can install packages that you need if they are not present in an existing image.

- You can add code and eventually compile it in the image so that it is ready to use when the container starts.

Content from Sharing your docker image

Last updated on 2024-07-05

Estimated time: 30 minutes

Overview

Questions

- How to share a container image in Docker Hub?

- How to build and share a container image through GitHub?

Objectives

- Learn how to push a container image to Docker Hub

- Learn how to trigger the container image build through GitHub Actions and share it through a GitHub Package.

Overview

In the previous episode you learnt how to build a container image.

In this episode, we will go through two alternatives for sharing the image.

Share an image through Docker Hub

Create an account in the Docker image registry: go to Docker Hub and click on “Sign up”. This is a separate account from GitHub or anything else.

On the terminal, log in to Docker Hub with docker login.

Tag the image with your username using docker tag.

Note that the ML image is big: the ML packages made it double the size of the base image! If you want a quick exercise, use your emoji image instead.

Push the image to Docker Hub with docker push (or, for a quick check, push the emoji image).

Once done, if your Docker Hub repository is public, anyone can see your image in https://hub.docker.com/u/<yourdockerhubname> and can pull it from there.
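The login, tag and push steps above can be sketched as a dry run. The user name yourdockerhubname, the local image name ml-python and the latest tag are placeholders chosen for this illustration, not names defined by the lesson. Each command is only printed; remove echo to run it.

```shell
# Hypothetical names: replace with your Docker Hub username and local image name
DOCKERHUB_USER="yourdockerhubname"
LOCAL_IMAGE="ml-python"

# On Docker Hub an image is addressed as <username>/<image>:<tag>
REMOTE_IMAGE="$DOCKERHUB_USER/$LOCAL_IMAGE:latest"

# Dry run: the commands are only printed; remove "echo" to execute them
echo docker login
echo docker tag "$LOCAL_IMAGE" "$REMOTE_IMAGE"
echo docker push "$REMOTE_IMAGE"
```

For the quick exercise, set LOCAL_IMAGE to the name of your emoji image instead.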

Document your image

Add a description and an overview.

Find your image in https://hub.docker.com/u/<yourdockerhubname>. Add a brief description. In “Overview”, write usage instructions.

Exchange images

Try someone else’s image.

Are the instructions clear?

Build and share an image through GitHub

You can also use GitHub Actions to build the image and share it through GitHub Packages.

Your repository should contain a Dockerfile and all the files that it needs.

Create a .github/workflows/ directory (with that exact name) in your repository, for example with mkdir -p .github/workflows, and save a file docker-build-deploy.yaml in it with the following content:

name: Create and publish a Docker image

on:
  push:
    branches:
      - main

env:
  REGISTRY: ghcr.io
  IMAGE_NAME: ${{ github.repository }}

jobs:
  build-and-push-image:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      packages: write

    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Log in to the Container registry
        uses: docker/login-action@v3
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Docker Metadata
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME }}

      - name: Build and push Docker image
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}

Before pushing to the GitHub repository, exclude files you do not want to push in a .gitignore file.

Then check the status with git status to make sure that you commit and push what you want.

Then add and commit the changes with git add and git commit.

Check the remote with git remote -v to see that the push will go where you want, and push the changes with git push.
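The whole sequence can be tried out safely in a scratch area first. In the sketch below, a local bare repository stands in for the GitHub remote, and the file contents, user identity and commit message are placeholders; none of these come from the lesson. With a real repository, origin would point to GitHub instead.

```shell
# Scratch area: a bare repository plays the role of the GitHub remote
scratch=$(mktemp -d)
git init --quiet --bare "$scratch/remote.git"

# A fresh working repository
git init --quiet "$scratch/myrepo"
cd "$scratch/myrepo"
git config user.name "Example User"          # placeholder identity
git config user.email "user@example.com"

# Stand-ins for your real Dockerfile and workflow file
printf 'FROM scratch\n' > Dockerfile
mkdir -p .github/workflows
: > .github/workflows/docker-build-deploy.yaml   # paste the workflow content here

# Keep local-only files out of the repository
touch notes.tmp
printf 'notes.tmp\n' > .gitignore

git status --short                           # review what would be committed
git add .
git commit --quiet -m "Add Dockerfile and image build workflow"
git branch -M main                           # the workflow triggers on pushes to main

git remote add origin "$scratch/remote.git"
git remote -v                                # confirm where the push will go
git push --quiet origin main
```

Because notes.tmp is listed in .gitignore, git add . leaves it out of the commit.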

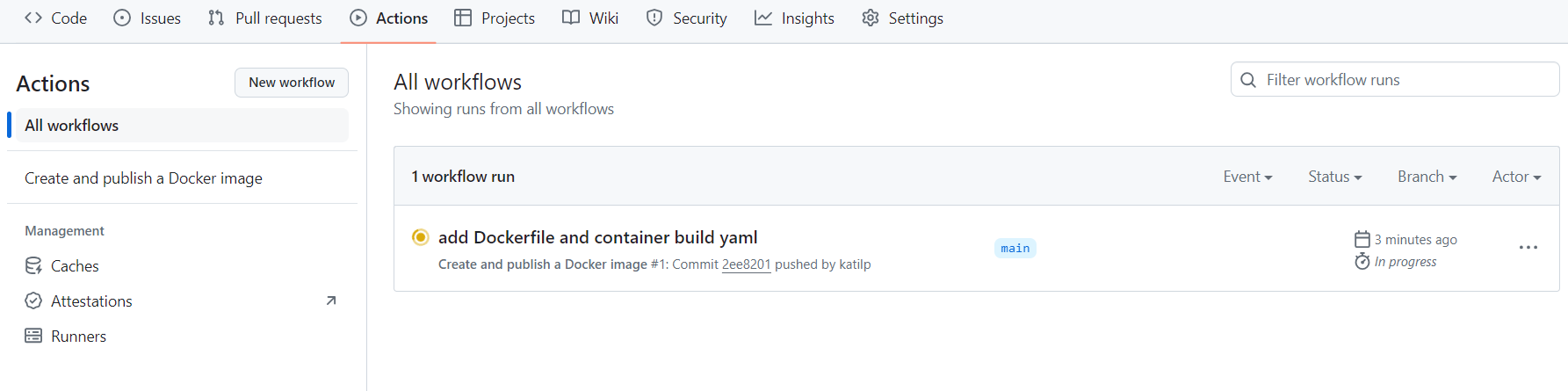

Once done, click on “Actions” in the GitHub Web UI and you will see the build ongoing:

If all goes well, after some minutes, you will see a green tick mark and the container image will be available in Packages

https://github.com/<yourgithubname>/<repository>/pkgs/container/<repository>

The image name is the same as the repository name because we have chosen so with IMAGE_NAME: ${{ github.repository }} on line 10 of the docker-build-deploy.yaml file.
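To pull the published image, you need its full reference in the registry. The sketch below builds that reference as a dry run. It assumes that the workflow keeps the default tagging of docker/metadata-action, which for a push to a branch uses the branch name as the tag; check the package page for the tags that were actually created. The repository name is a placeholder.

```shell
# Hypothetical repository in "owner/name" form, as in ${{ github.repository }}
REPOSITORY="YourGitHubName/My-Repo"
BRANCH="main"

# Image references must be lowercase, so normalise the repository name
image_path=$(printf '%s' "$REPOSITORY" | tr '[:upper:]' '[:lower:]')
IMAGE_REF="ghcr.io/$image_path:$BRANCH"

# Dry run: the command is only printed; remove "echo" to execute it
echo docker pull "$IMAGE_REF"
```

If the package is private, log in to ghcr.io with docker login before pulling.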

Learn more

Learn more about Dockerfile instructions in the Dockerfile reference.

Learn more about writing Dockerfiles in the HSF Docker tutorial.

Learn more about using Docker containers in the analysis work in the Analysis pipelines training.

- It is easy to build a new container image on top of an existing image.

- You can install packages that you need if they are not present in an existing image.

- You can add code and eventually compile it in the image so that it is ready to use when the container starts.